… when You can’t use InMemory

We read more and more about temporary tables and table variables and the pros and cons of one over the other, and some myths have been debunked ( …both live in tempdb ). In short, there are a couple of points in favor of temp tables:

- Have statistics, while for table variables (also InMemory) SQL Server always estimates 1 row.
- Are accessible from stored procedures called inside the one that created the temp table.

Point 1 becomes negligible for objects with few rows, while for a large number of rows we can use:

- OPTION (RECOMPILE), but particular attention is needed with very frequent calls (even every second), because you may spend more CPU continuously recompiling the statements than running them.
- TRACE FLAG 2453, but the setting is server-wide, so the risk is to fall back into the situation mentioned above.
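As a minimal sketch of the two options above (the table variable `@Ids` and the join against `sys.all_objects` are illustrative assumptions, not part of the original test), the hint goes on the single statement that reads the table variable, while the trace flag is switched on for the whole instance:

```sql
-- Hypothetical example: @Ids and the queries below are illustrative only.
DECLARE @Ids TABLE (Id int NOT NULL PRIMARY KEY);

-- Load enough rows for the 1-row estimate to matter.
INSERT INTO @Ids (Id)
SELECT TOP (10000) ROW_NUMBER() OVER (ORDER BY (SELECT NULL))
FROM sys.all_objects AS a
CROSS JOIN sys.all_objects AS b;

-- No hint: the plan is compiled before @Ids is populated,
-- so the optimizer typically estimates 1 row for it.
SELECT COUNT(*)
FROM @Ids AS i
JOIN sys.all_objects AS o ON o.object_id = i.Id;

-- With the hint: the statement is compiled when it runs,
-- so the actual row count of @Ids is visible to the optimizer.
SELECT COUNT(*)
FROM @Ids AS i
JOIN sys.all_objects AS o ON o.object_id = i.Id
OPTION (RECOMPILE);

-- Instance-wide alternative (left commented out on purpose: it affects every session).
-- DBCC TRACEON (2453, -1);
-- DBCC TRACEOFF (2453, -1);
```

Comparing the estimated and actual rows of the two SELECT statements in the actual execution plan shows the effect of the hint.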

Given this, let’s look at a more “practical” approach to this comparison, which basically takes into account two key points for TempDb performance when dealing with “High Workload Scenarios”:

- PAGELATCH contention, EX and SH, on PFS pages (page 1 and every 8088 pages, about 64 MB), and on GAM and SGAM pages (pages 2 and 3, then every 511232 pages, about 4 GB).
- TempDb Tlog traffic, generated by log records, which translates into MB/s.
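To see whether the first kind of contention is happening on a live system, one possible sketch is to classify the pages behind current PAGELATCH waits in tempdb. It assumes the usual `dbid:fileid:pageid` format of `resource_description`, a PFS interval of 8088 pages and a GAM/SGAM interval of 511232 pages; the column aliases are my own.

```sql
-- Sketch: classify tempdb pages currently waited on with PAGELATCH_*.
-- Assumes resource_description looks like '2:1:103' (dbid:fileid:pageid).
SELECT
    wt.session_id,
    wt.wait_type,
    wt.wait_duration_ms,
    wt.resource_description,
    CASE
        WHEN p.page_id = 0 THEN 'File header'
        WHEN p.page_id = 1 OR p.page_id % 8088 = 0 THEN 'PFS'
        WHEN p.page_id = 2 OR p.page_id % 511232 = 0 THEN 'GAM'
        WHEN p.page_id = 3 OR (p.page_id - 1) % 511232 = 0 THEN 'SGAM'
        ELSE 'Other'
    END AS page_type
FROM sys.dm_os_waiting_tasks AS wt
CROSS APPLY (
    -- Turn '2:1:103' into '2.1.103' so PARSENAME can pick the last part.
    SELECT TRY_CAST(PARSENAME(REPLACE(wt.resource_description, ':', '.'), 1) AS bigint) AS page_id
) AS p
WHERE wt.wait_type LIKE 'PAGELATCH[_]%'
  AND wt.resource_description LIKE '2:%';   -- database_id 2 = tempdb
```

The DMV only shows tasks waiting at that instant, so on a busy server it is usually sampled repeatedly.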

To show the differences I’ll use two stored procedures, one which creates a temporary table and the other which creates a table variable, inserting 5 rows each; their structure and average row count are very similar to those of our environments. An Extended Events session and a query over the TempDb Tlog will show the differences.

```sql
CREATE PROCEDURE [dbo].[proc_TestTemp]
AS
BEGIN
    SET NOCOUNT ON

    CREATE TABLE #Table1(
        [Fld1] [bigint] NULL,
        [Fld2] [int] NULL,
        [Fld3] [int] NULL,
        [Fld4] [tinyint] NULL,
        [Fld5] [decimal](9, 2) NULL,
        [Fld6] [decimal](9, 2) NULL,
        [Fld7] [tinyint] NULL,
        [Fld8] [varchar](15),
        INDEX [IX_Fld1] CLUSTERED ([Fld1] ASC))

    INSERT INTO #Table1 VALUES (1,100000,200000,35,9.20,9.20, 3, 'Test')
    INSERT INTO #Table1 VALUES (10,100000,200000,35,9.20,9.20, 3, 'Test')
    INSERT INTO #Table1 VALUES (100,100000,200000,35,9.20,9.20, 3, 'Test')
    INSERT INTO #Table1 VALUES (1000,100000,200000,35,9.20,9.20, 3, 'Test')
    INSERT INTO #Table1 VALUES (1000,100000,200000,35,9.20,9.20, 3, 'Test')
END
GO

CREATE PROCEDURE [dbo].[proc_TestVar]
AS
BEGIN
    SET NOCOUNT ON

    DECLARE @Table1 TABLE (
        [Fld1] [bigint] NULL,
        [Fld2] [int] NULL,
        [Fld3] [int] NULL,
        [Fld4] [tinyint] NULL,
        [Fld5] [decimal](9, 2) NULL,
        [Fld6] [decimal](9, 2) NULL,
        [Fld7] [tinyint] NULL,
        [Fld8] [varchar](15),
        INDEX [IX_Fld1] CLUSTERED ([Fld1] ASC))

    INSERT INTO @Table1 VALUES (1,100000,200000,35,9.20,9.20, 3, 'Test')
    INSERT INTO @Table1 VALUES (10,100000,200000,35,9.20,9.20, 3, 'Test')
    INSERT INTO @Table1 VALUES (100,100000,200000,35,9.20,9.20, 3, 'Test')
    INSERT INTO @Table1 VALUES (1000,100000,200000,35,9.20,9.20, 3, 'Test')
    INSERT INTO @Table1 VALUES (1000,100000,200000,35,9.20,9.20, 3, 'Test')
END
GO
```

The Extended Events session uses the sqlserver.latch_acquired event, filtered on database_id 2 (tempdb) and on the session from which I’m running the stored procedures.

```sql
CREATE EVENT SESSION [Latch] ON SERVER
ADD EVENT sqlserver.latch_acquired(
    ACTION(sqlserver.session_id,sqlserver.sql_text)
    WHERE ([package0].[equal_uint64]([database_id],(2)) AND [sqlserver].[session_id]=(57)))
ADD TARGET package0.event_file(SET filename=N'Latch'),
ADD TARGET package0.ring_buffer(SET max_memory=(40960))
WITH (MAX_MEMORY=4096 KB,
      EVENT_RETENTION_MODE=ALLOW_SINGLE_EVENT_LOSS,
      MAX_DISPATCH_LATENCY=1 SECONDS,
      MAX_EVENT_SIZE=0 KB,
      MEMORY_PARTITION_MODE=NONE,
      TRACK_CAUSALITY=OFF,
      STARTUP_STATE=OFF)
GO
```

The Tlog query is something like:

```sql
SELECT
    fd.[Current LSN],
    fd.Operation,
    fd.AllocUnitName,
    fd.[Transaction Name],
    fd.[Transaction ID]
FROM sys.fn_dblog(NULL, NULL) AS fd
```

Putting it all together, and issuing a checkpoint before starting, let’s check the results with the temp table…

```sql
USE tempdb
GO
CHECKPOINT
GO
ALTER EVENT SESSION Latch ON SERVER STATE = start
GO
exec [TestDb].[dbo].[proc_TestTemp]
GO
ALTER EVENT SESSION Latch ON SERVER STATE = stop
GO
SELECT
    fd.[Current LSN],
    fd.Operation,
    fd.AllocUnitName,
    fd.[Transaction Name],
    fd.[Transaction ID]
FROM sys.fn_dblog(NULL, NULL) AS fd
```

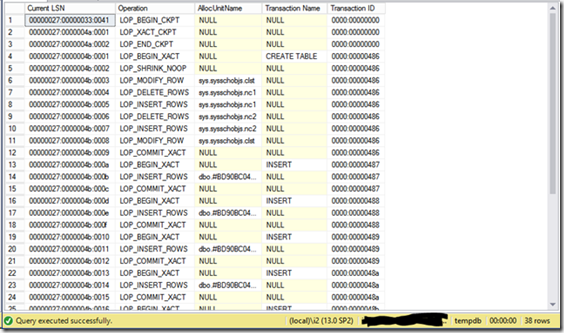

The Tlog query shows that there are 38 log records (138 at the first execution).

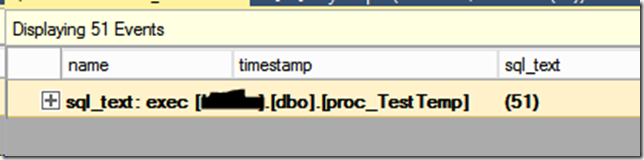

The Extended Events session shows a total of 51 acquired latches.
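For reference, the latch total can also be counted with a query instead of the event viewer. This is only a sketch: the `Latch*.xel` pattern assumes the event file ended up in the instance's default log directory with an `.xel` extension, so the path may need adjusting.

```sql
-- Sketch: count the latch_acquired events written to the event_file target.
-- The file pattern is an assumption; replace it with the real path if needed.
SELECT COUNT(*) AS acquired_latches
FROM sys.fn_xe_file_target_read_file(N'Latch*.xel', NULL, NULL, NULL) AS f
WHERE f.object_name = N'latch_acquired';
```

Reading from the file target works after the session has been stopped, whereas the ring buffer content is only available while the session is running.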

Let’s remove the files just created and repeat the same test with the table variable.

This time the query over the Tlog shows 23 records (240 at the first execution, meaning that caching a table variable creates more records).

The session now shows a total of 11 acquired latches.

Basically, table variables require far fewer latches and Tlog records than temp tables, which with thousands of calls per minute could make a great difference. If we change the Tlog query to extract SUM([Log Record Length]), we find that the stored procedure using the temp table writes 5552 bytes, while the other writes 2632 bytes.
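The modified Tlog query is not shown in the post; a minimal version, assuming it is run in tempdb right after the test as in the script above, could look like this (the column aliases are mine):

```sql
USE tempdb
GO
-- Number of log records and total bytes written since the last checkpoint.
SELECT
    COUNT(*)                    AS LogRecords,
    SUM(fd.[Log Record Length]) AS LogBytes
FROM sys.fn_dblog(NULL, NULL) AS fd

-- Same figures broken down by operation, to see where the bytes go.
SELECT
    fd.Operation,
    COUNT(*)                    AS LogRecords,
    SUM(fd.[Log Record Length]) AS LogBytes
FROM sys.fn_dblog(NULL, NULL) AS fd
GROUP BY fd.Operation
ORDER BY LogBytes DESC
```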

Let’s now check what happens to the Performance Counters “Log Flushes/sec” and “Log Bytes Flushed/sec” of the “Databases” object, tempdb instance; for this purpose I used the SQL Load Generator to generate (only) around 130 batches/sec with the two stored procedures.
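If Performance Monitor is not at hand, the same two counters can be sampled from T-SQL. The sketch below is an assumption about how one might do it, not the method used for the figures in this post: the DMV exposes these counters as cumulative values, so it takes two samples 10 seconds apart and divides the difference by the interval.

```sql
-- Sketch: per-second rates for the tempdb log counters over a 10-second window.
DECLARE @first TABLE (counter_name nvarchar(128) NOT NULL, cntr_value bigint NOT NULL);

-- First sample of the cumulative counters.
INSERT INTO @first (counter_name, cntr_value)
SELECT RTRIM(pc.counter_name), pc.cntr_value
FROM sys.dm_os_performance_counters AS pc
WHERE pc.object_name LIKE N'%:Databases%'
  AND pc.instance_name = N'tempdb'
  AND pc.counter_name IN (N'Log Flushes/sec', N'Log Bytes Flushed/sec');

WAITFOR DELAY '00:00:10';

-- Second sample: the difference divided by the interval gives the rate.
SELECT
    f.counter_name,
    (pc.cntr_value - f.cntr_value) / 10.0 AS per_second
FROM sys.dm_os_performance_counters AS pc
JOIN @first AS f
  ON f.counter_name = RTRIM(pc.counter_name)
WHERE pc.object_name LIKE N'%:Databases%'
  AND pc.instance_name = N'tempdb';
```

It should be run while the load generator is active, once for each stored procedure.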

This is the result with the temp table:

16 log flushes per second and around 650 KB/sec of Tlog traffic.

With the table variable, on the other hand, we have:

Almost half the Log Flushes/sec (8), and Log Bytes Flushed/sec more than halved at 300 KB/sec.

So, from a practical point of view, when we can’t enable the InMemory feature, it is more useful to start with table variables, particularly when we work with a small number of rows and several calls per minute (or second). When performance favors temp tables and the call rate is not so high, a RECOMPILE on the statement should eliminate any difference.